In this chapter, we will examine the types of clustering available in Exchange Server 2003, the process of creating and managing Exchange clusters, and also configuring Exchange servers in a front-end/back-end arrangement.

Clustering Concepts

Clustering is not a feature of Exchange Server 2003 itself but instead a feature provided by the operating system: Windows 2000 Server or Windows Server 2003. Exchange, however, is cluster aware, meaning that it can recognize that it is being installed into a cluster and will configure itself appropriately during installation to support operation in this environment. Before we go any further into working with Exchange clusters, we need to step back and examine the types of clustering available in Windows Server 2003 and then discuss basic clustering models and terminology. This will lead the way into creating and managing Exchange clusters later in this chapter.

Clustering Methods

Windows Server 2003 provides support for two different clustering methods: Network Load Balancing and the Microsoft Clustering Service. Each of these methods has different purposes and, as you might expect, different hardware requirements.

Network Load Balancing

Network Load Balancing (NLB) is the simpler of the two clustering methods to understand, so we will examine it first. Network Load Balancing is installed on all participating members as an additional network driver. This driver uses a mathematical algorithm to distribute incoming requests among all members of the NLB cluster (equally, by default). Other than having two network interfaces installed in each NLB cluster member, there are no special hardware requirements to implement Network Load Balancing. Incoming client requests are assigned to cluster members by this algorithm and the configured load weights, not by each member’s current load. You can modify the distribution through filtering and through affinity. Affinity is useful for applications that must maintain session state data, such as an e-commerce application that uses cookies to place items in a shopping cart for purchase.
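A minimal Python sketch can make the idea of affinity concrete. It is a simplified model and does not reproduce NLB’s real algorithm. The use of SHA-256, the `pick_host` function, and the host names are all hypothetical. The sketch shows the general approach: every host can compute the same hash independently. With single affinity, the hash covers only the client’s IP address, so all of a client’s connections land on the same host and its session state (such as a shopping cart) stays put.

```python
import hashlib


def pick_host(hosts, client_ip, client_port, affinity="single"):
    """Return the host that accepts a connection in a simplified NLB-style model.

    This is NOT NLB's actual algorithm; it only illustrates hash-based
    distribution. affinity="single" hashes the client IP alone, so every
    connection from one client reaches the same host. affinity="none" also
    hashes the source port, spreading one client's connections across hosts.
    """
    if not hosts:
        raise ValueError("no hosts available")
    if affinity == "single":
        key = client_ip
    elif affinity == "none":
        key = f"{client_ip}:{client_port}"
    else:
        raise ValueError(f"unknown affinity: {affinity!r}")
    # A deterministic hash lets each host reach the same answer on its own,
    # with no coordination needed to decide who handles the request.
    digest = hashlib.sha256(key.encode("ascii")).digest()
    bucket = int.from_bytes(digest[:8], "big") % len(hosts)
    return sorted(hosts)[bucket]


if __name__ == "__main__":
    hosts = ["web1", "web2", "web3"]
    # With single affinity, two connections from the same client agree.
    print(pick_host(hosts, "192.0.2.10", 50000), pick_host(hosts, "192.0.2.10", 50001))
```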

The members of an NLB cluster track one another’s existence (and thus their operational state) by exchanging special messages called heartbeats. If a cluster member stops sending heartbeat messages for a specified period of time, the cluster assumes it is no longer responding to client requests, and a process known as convergence occurs. During convergence, the remaining cluster members determine which members are still available and redistribute incoming requests accordingly. Note that in Network Load Balancing, heartbeats track only whether a server is still sending heartbeats. A server may be unable to respond to client requests, but as long as it keeps sending heartbeats, the cluster will consider it active and available.
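The heartbeat and convergence behavior can be modeled in a few lines of Python. This toy sketch is not NLB’s implementation. The `HeartbeatTracker` class is hypothetical, and the five-second tolerance is an illustrative value, not a documented default. The model reproduces the limitation just described: membership depends only on heartbeats, not on whether the host is actually answering clients.

```python
from dataclasses import dataclass, field


@dataclass
class HeartbeatTracker:
    """Toy model of heartbeat-based membership and convergence."""

    tolerance: float  # seconds of silence before a member is presumed gone
    last_seen: dict = field(default_factory=dict)  # host -> time of last heartbeat

    def heartbeat(self, host, now):
        """Record a heartbeat from `host` at time `now` (seconds)."""
        self.last_seen[host] = now

    def converge(self, now):
        """Return the sorted hosts still considered active at time `now`.

        Only heartbeats are checked: a host whose application has hung but
        which keeps sending heartbeats is still reported as active.
        """
        return sorted(h for h, t in self.last_seen.items() if now - t <= self.tolerance)


if __name__ == "__main__":
    tracker = HeartbeatTracker(tolerance=5.0)
    tracker.heartbeat("web1", 0.0)
    tracker.heartbeat("web2", 0.0)
    tracker.heartbeat("web1", 4.0)  # web2 falls silent after t=0
    print(tracker.converge(6.0))  # ['web1']
```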

Because all members of an NLB cluster are considered to be equal to one another, a client’s initial connection may be made to any available cluster member. This sort of arrangement works very well for services such as websites, FTP servers, and VPN servers but does not work well when clients must connect to a specific Exchange server that hosts their mailboxes. Members of NLB clusters do not require any specialized storage devices, nor do they need to be members of a domain in order to be configured as part of the NLB cluster. Windows Server 2003 provides for up to 32 nodes in a Network Load Balancing cluster. Exchange Server 2003, however, does not support operation on a Network Load Balancing cluster.

Microsoft Clustering Service

The Microsoft Clustering Service (MSCS) is the second clustering method available in Windows Server 2003, and it is the only one that Exchange Server 2003 supports. MSCS provides highly available server solutions through a process known as failover. An MSCS cluster consists of two or more nodes (members). If one node fails, any of the remaining cluster nodes can transfer the failed node’s resources to itself, which keeps those resources available for client access. During this time, clients see little, if any, interruption in service as the resources fail over from the nonresponsive node to a remaining functional node.

As with Network Load Balancing clusters, MSCS clusters rely on a heartbeat to keep all cluster members apprised of the current status of every other member. Unlike Network Load Balancing, however, the Microsoft Clustering Service is service aware, meaning that it can monitor individual services on a member, not just the member as an overall entity. You will see how this comes into play when we examine creating Exchange virtual servers later in this chapter. Also unlike Network Load Balancing, clustering with MSCS has demanding hardware requirements, including a shared storage device to which all nodes have equal access. Clustering with MSCS is especially useful for applications that use large database files, such as Exchange Server or SQL Server, because only one node can have a given database open at a time. Since a second node cannot serve the same database in parallel, when a member of an MSCS cluster fails, the cluster service transfers that member’s resources to another (operating) member of the cluster through the failover process.

Clusters using the Microsoft Clustering Service can be created in one of two clustering modes: Active/Active and Active/Passive. When using Exchange Server 2003 on Windows Server 2003 Enterprise Edition or Datacenter Edition, you can create Active/Passive clusters of up to eight nodes. An Active/Active Exchange Server 2003 cluster, however, can have only two nodes. We will examine these two clustering modes shortly, but first we need to cover some basic clustering terminology that will make the rest of this discussion easier to follow.

Clustering Terminology

It seems that everything related to the field of information technology has its own special set of acronyms and buzzwords that you must understand in order to work with, and discuss, the specific technology at hand. Clustering is no different, and it is perhaps one of the more esoteric fields when it comes to terms you must understand. A thorough understanding of the following basic clustering terms will serve you well through the rest of this chapter and in your duties as an Exchange administrator. It probably won’t hurt too much for the exam experience either.

Cluster: A cluster is a group of two or more servers that act together as a single, larger resource.

Cluster resource: A cluster resource is a service or property, such as a storage device, an IP address, or the Exchange System Attendant service, that is defined, monitored, and managed by the cluster service.

Virtual server: A virtual server is an instance of the clustered application (Exchange Server 2003 in this case) that runs on a cluster node and uses a set of cluster resources. Virtual servers may also be referred to as cluster resource groups.

Failover: Failover is the process of moving resources from a failed cluster node to another cluster node. If any of the cluster resources on an active node becomes unresponsive or unavailable for longer than the configured threshold, failover occurs.

Failback: Failback is the process of cluster resources moving back to their preferred node after the preferred node has resumed active membership in the cluster.

Node: A node is an individual member of a cluster, otherwise referred to as a cluster node, cluster member, member, or cluster server.

Quorum disk: A quorum disk is the disk set that contains definitive cluster configuration data. All members of an MSCS cluster must have continuous, reliable access to the data that is contained on a quorum disk. Information contained on the quorum disk includes data about the nodes that are participating in the cluster, the applications and resources that are defined within the cluster, and the current status of each member, application, and resource. Quorum disks represent one of the specialized hardware requirements of clusters and are typically placed on a large shared storage device.

Clustering Modes

Recall from our earlier discussion that clustering uses a group of between two and eight servers, all tied to a common shared storage device, to create a highly available solution for clients. The Microsoft Clustering Service treats applications, services, IP addresses, and hardware devices as resources, which it monitors to determine the health of the cluster and its members. When the service first notes a problem with a resource, it attempts to correct the problem on that cluster node, for example by restarting a service that has stopped. If the problem cannot be corrected within a specified amount of time, the clustering service fails the resource, takes the affected virtual server offline, and moves it to another functioning node in the cluster. After the move, the clustering service restarts the virtual server so that it can begin servicing client requests on the new node.

Windows Server 2003 and Exchange Server 2003 support two different clustering modes, as discussed next.

Active/Passive Clustering

When Active/Passive clustering is used, a cluster can contain between two and eight nodes. At least one node must be active and at least one node must be passive. Beyond that, any combination is allowed, such as a six-node Active/Passive cluster with four active nodes and two passive nodes. The active nodes are online and provide the clustered resources to clients. The passive nodes sit idle and do not provide services of any kind to clients. Should an active node fail, the clustering service can transfer the virtual server from the failed node to a passive node. The passive node’s operational state changes to active, and it can begin servicing client requests for the clustered resource. Since any passive node may have to pick up the load of any active node, all active and passive nodes in the cluster should be configured identically in both hardware and software. As you will see in our discussion of Active/Active clustering next, Active/Passive is the preferred mode of operation for Exchange Server clusters.
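The node-count rules for the two modes can be captured in a small validation function. This sketch encodes only the limits given in this chapter for Exchange Server 2003 on Windows Server 2003 Enterprise or Datacenter Edition. Active/Passive requires two to eight nodes, including at least one active and one passive node. Active/Active requires exactly two nodes. The function name is hypothetical.

```python
def check_exchange_cluster(mode, active, passive):
    """Return (ok, message) for a proposed Exchange Server 2003 cluster layout.

    Encodes only the node-count limits described in the text, assuming
    Windows Server 2003 Enterprise or Datacenter Edition.
    """
    total = active + passive
    if mode == "active/passive":
        if not 2 <= total <= 8:
            return False, "Active/Passive clusters need between two and eight nodes"
        if active < 1 or passive < 1:
            return False, "Active/Passive clusters need at least one active and one passive node"
        return True, "ok"
    if mode == "active/active":
        if active != 2 or passive != 0:
            return False, "Active/Active Exchange clusters have exactly two active nodes"
        return True, "ok"
    raise ValueError(f"unknown mode: {mode!r}")


if __name__ == "__main__":
    print(check_exchange_cluster("active/passive", active=4, passive=2))  # (True, 'ok')
    print(check_exchange_cluster("active/active", active=3, passive=0))
```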

Active/Active Clustering

When Active/Active clustering is used, each node in the cluster runs one instance of the clustered service. Should the clustered service fail on one node, that instance is transferred to the other active node. Although both nodes do useful work in Active/Active mode, you are limited in how many storage groups and Exchange mailboxes each node can host.

A single Exchange Server 2003 Enterprise Edition server is limited to a maximum of four storage groups. Should one of the nodes fail, the single remaining node must host the storage groups of both nodes. For example, suppose one node had three storage groups and the second node had two. If either node failed, one storage group could not be started on the surviving node, because five storage groups exceed the four-group limit. In addition, each node in an Active/Active cluster may host only 1,900 active mailboxes, several thousand fewer than an Exchange server might typically be able to hold.
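The storage group arithmetic is easy to overlook during planning. The following minimal Python sketch checks a two-node Active/Active layout against the two limits quoted above: four storage groups per server and 1,900 active mailboxes per node. The function and its inputs are hypothetical, and the sketch checks only these two numbers, not any other sizing guidance.

```python
MAX_STORAGE_GROUPS = 4  # per Exchange Server 2003 Enterprise Edition server
MAX_ACTIVE_MAILBOXES = 1900  # per node in an Active/Active cluster


def active_active_problems(groups, mailboxes):
    """List problems for a two-node Active/Active layout.

    groups:    (storage groups on node 1, storage groups on node 2)
    mailboxes: (active mailboxes on node 1, active mailboxes on node 2)
    After a failover the survivor must run both nodes' storage groups,
    so the combined count must fit within a single server's limit.
    """
    problems = []
    combined = sum(groups)
    if combined > MAX_STORAGE_GROUPS:
        excess = combined - MAX_STORAGE_GROUPS
        problems.append(f"{excess} storage group(s) could not start on the surviving node")
    for node, count in enumerate(mailboxes, start=1):
        if count > MAX_ACTIVE_MAILBOXES:
            problems.append(f"node {node} exceeds {MAX_ACTIVE_MAILBOXES} active mailboxes")
    return problems


if __name__ == "__main__":
    # The example from the text: three storage groups plus two.
    print(active_active_problems(groups=(3, 2), mailboxes=(1500, 1500)))
```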

Cluster Models

After determining the cluster mode that you will use, you still have more choices ahead of you. The Microsoft Clustering Service offers you three different clustering models to choose from. The clustering model you choose dictates the storage requirements and configuration of your new cluster, so you will want to choose wisely and only after giving it adequate thought and planning. We will examine each of the three available models next.

Single Node Cluster

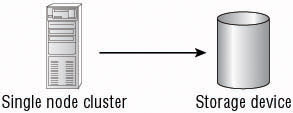

The single node cluster is the simplest cluster model to implement. This model has one cluster node that uses either local storage or an external storage device, as depicted in Figure 4.1. Although this model does not offer all of the benefits of the single quorum cluster, discussed next, it should not be overlooked because it does still provide some benefits to the organization interested in clustering Exchange Server 2003.

Although you’d never want to use a single node cluster to provide highly available Exchange resources to clients, you can use one to develop and test cluster processes and procedures for your organization. A single node cluster still retains the clustering service’s ability to attempt to restart failed services, so it mimics a production cluster in this way. You may also consider creating a single node cluster that uses an external storage device as a way to prestage a larger cluster solution. Later, you simply join the additional nodes to the existing cluster and configure the desired policies for the cluster.

Single Quorum Cluster

The single quorum cluster, seen in Figure 4.2, is the standard clustering model that comes to mind when most people think of clustering. In the single quorum model, all nodes are attached to the external shared storage device. All cluster configuration data is kept on this storage device, so all cluster nodes have access to the quorum data. The single quorum model is used to create Active/Active and Active/Passive clusters whose nodes are all at a single site, such as within a single server room.

Majority Node Set Cluster

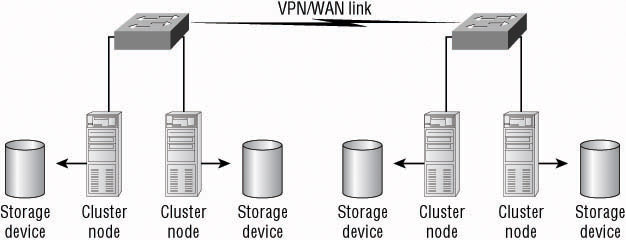

The majority node set cluster model, seen in Figure 4.3, is a new high-end clustering model first available in Windows Server 2003 that allows for two or more cluster nodes to be configured so that multiple storage devices can be used. The cluster configuration data is kept on all disks across the cluster, and the Microsoft Clustering Service is responsible for keeping this data up-to-date. Although this model has the advantage of being able to locate cluster nodes in different geographical locations, it is a very complex and costly model to implement.

Majority node set clusters are likely the future of advanced clustering; however, they are not right for everyone. A majority node set can span a maximum of two well-connected sites (500 ms or less of latency). In addition, Microsoft currently recommends that you implement majority node set clustering only in very specific instances and only with close support from your original equipment manufacturer, independent software vendor, or independent hardware vendor.
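The model’s name reflects its quorum rule: a majority node set cluster keeps running only while more than half of its configured nodes are online and able to communicate. The short Python sketch below (function names hypothetical) shows the arithmetic. It also shows why the choice of sites matters. If a four-node cluster is split evenly across two sites and the link between them fails, neither half holds a majority.

```python
def majority(total_nodes):
    """Smallest node count that is more than half of the cluster."""
    return total_nodes // 2 + 1


def has_quorum(total_nodes, nodes_reachable):
    """True if the reachable nodes form a majority of the configured nodes."""
    return nodes_reachable >= majority(total_nodes)


if __name__ == "__main__":
    # Four nodes split 2 + 2 across two sites: a link failure leaves
    # each side with two nodes, short of the three needed.
    print(majority(4), has_quorum(4, 2))  # 3 False
    # Five nodes split 3 + 2: the three-node side keeps quorum.
    print(has_quorum(5, 3), has_quorum(5, 2))  # True False
```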

Cluster Operation Modes

Having come this far in our discussion of clustering, you must consider one more item when preparing to implement a clustered solution: which mode of cluster operation you wish to use.

When discussing cluster operation modes, you are really talking about how the cluster reacts when a failover situation occurs, that is, how a failed node’s resources fail over to an available cluster node. You can choose from the following four modes of cluster operation:

Failover pair: When a failover pair is configured, resources fail over between two specific cluster nodes. This is accomplished by listing only these two nodes in the Possible Owners list for the resources of concern. This mode of operation can be used in either Active/Active or Active/Passive clustering.

Hot standby: When hot standby is configured, a passive node can take on the resources of any failed active node. Hot standby is really just another term for Active/Passive, as discussed earlier in this chapter. You configure hot standby through a combination of the Preferred Owners list and the Possible Owners list. The preferred node is configured in the Preferred Owners list and designated as the node that will run the application or service under normal conditions. The spare (hot standby) node is configured in the Possible Owners list. For example, take a six-node Active/Passive cluster with four active nodes and two passive nodes. Two of the active nodes might list one passive node as a possible owner, and the other two active nodes might list the other passive node.

Failover ring: The failover ring mode, which corresponds to the Active/Active clustering discussed previously, has each node in the cluster running an instance of the application or resource being clustered. When one node fails, the clustered resource is moved to the next node in the sequence. In Exchange clusters, this mode of operation is of little use. An Active/Active Exchange cluster can have only two nodes, so the failed node’s resources have nowhere to fail over to except the single remaining node.

Random failover: When random failover is configured, the clustered resource is failed over to a randomly chosen available cluster node. Random failover is configured by leaving the Preferred Owners list empty for each virtual server.
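The four operation modes differ mainly in how the Preferred Owners and Possible Owners lists are filled in. The following Python function is a simplified model of choosing a failover target from those lists and does not reproduce the cluster service’s actual selection logic. The function and node names are hypothetical. The function walks the Preferred Owners list in order, considering only nodes that are possible owners and still online. If no preferred node qualifies, it picks a random eligible node, which is what an empty Preferred Owners list produces.

```python
import random


def choose_failover_target(preferred, possible, online, failed, rng=random):
    """Pick the node a virtual server moves to when `failed` goes down.

    preferred: ordered Preferred Owners list (may be empty)
    possible:  Possible Owners list (nodes allowed to host the resource)
    online:    set of nodes currently up
    Returns None when no eligible node remains.
    """
    eligible = [n for n in possible if n in online and n != failed]
    if not eligible:
        return None
    # Failover pair / hot standby: honor the preferred order when possible.
    for node in preferred:
        if node in eligible:
            return node
    # No preferred node qualifies: pick any eligible possible owner at random
    # (with an empty Preferred Owners list, this is random failover).
    return rng.choice(eligible)


if __name__ == "__main__":
    up = {"A1", "A2", "A3", "A4", "P1", "P2"}
    # Hot standby: A1 prefers itself, and P1 is its spare in Possible Owners.
    print(choose_failover_target(["A1"], ["A1", "P1"], up, failed="A1"))  # P1
    # Random failover: empty Preferred Owners list.
    print(choose_failover_target([], ["A1", "A2", "A3"], up, failed="A1", rng=random.Random(0)))
```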

A failover policy is what the cluster service uses to determine how to react when a clustered resource is unavailable. A failover policy consists of three items:

- A list of preferred nodes. This list designates the order in which the resource should be failed over to the remaining nodes in the cluster.

- Failover threshold and timing. You can configure the clustering service to fail over the resource immediately when it fails, or to first attempt to restart it a specified number of times within a specified amount of time.

- Failback time constraints. Because it might not always be in your best interests to have resources fail back to their original nodes automatically, you can configure how and when failback occurs.
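The threshold and failback settings can be sketched as a small policy object. This illustration does not reproduce the cluster service’s code. The `FailoverPolicy` class and its field names are hypothetical, times are plain seconds and hours, and a threshold of zero stands for the "fail over immediately" choice. If a failback window’s start hour is later than its end hour, the sketch treats the window as wrapping past midnight, a common way to restrict failback to off-peak hours.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class FailoverPolicy:
    restart_threshold: int  # restarts allowed on the current node (0 = fail over immediately)
    restart_period: float  # ...within this many seconds
    failback_window: Optional[Tuple[int, int]] = None  # (start_hour, end_hour); None = no failback
    restart_times: List[float] = field(default_factory=list)

    def on_failure(self, now):
        """Return "restart" or "failover" for a resource failure at time `now`."""
        # Keep only the restarts that fall inside the sliding period.
        self.restart_times = [t for t in self.restart_times if now - t < self.restart_period]
        if len(self.restart_times) < self.restart_threshold:
            self.restart_times.append(now)
            return "restart"
        return "failover"

    def may_fail_back(self, hour):
        """True if failback to the preferred node is allowed at this hour (0-23)."""
        if self.failback_window is None:
            return False
        start, end = self.failback_window
        if start <= end:
            return start <= hour < end
        return hour >= start or hour < end  # window wraps past midnight


if __name__ == "__main__":
    policy = FailoverPolicy(restart_threshold=3, restart_period=900, failback_window=(22, 2))
    print([policy.on_failure(t) for t in (0, 10, 20, 30)])
    # ['restart', 'restart', 'restart', 'failover']
    print(policy.may_fail_back(23), policy.may_fail_back(12))  # True False
```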
